Compressed-Language Models for Understanding Compressed File Formats: a JPEG Exploration

Abstract

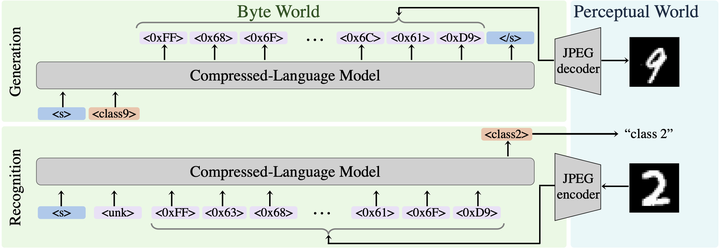

This study investigates whether Compressed-Language Models (CLMs), i.e., language models operating on raw byte streams from Compressed File Formats (CFFs), can understand files compressed by CFFs. We focus on the JPEG format as a representative CFF because it is widely used and exemplifies key compression concepts such as entropy coding and run-length encoding. We test whether CLMs understand the JPEG format by probing their capabilities along three axes: recognition of inherent file properties, handling of files with anomalies, and generation of new files. Our findings demonstrate that CLMs can effectively perform these tasks. These results suggest that CLMs can understand the semantics of compressed data when operating directly on the byte streams of files produced by CFFs. Operating directly on raw compressed files promises to leverage some of their remarkable characteristics, such as their ubiquity, compactness, multi-modality, and segment-based nature.
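As a rough illustration of what "operating on raw byte streams" can look like in practice, the sketch below treats every byte of a file as one integer token and applies a single-byte perturbation of the kind an anomaly-handling probe might use. It is a minimal sketch under stated assumptions, not the authors' pipeline: the helper names (`bytes_to_tokens`, `tokens_to_bytes`, `looks_like_jpeg`, `perturb_byte`) and the toy byte stream are hypothetical. The only facts taken from the JPEG format are the start-of-image marker `FF D8` and the end-of-image marker `FF D9`.

```python
# Hypothetical sketch: helper names are illustrative and not taken from the paper.

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def bytes_to_tokens(data: bytes) -> list[int]:
    """Map each byte to an integer token id in [0, 255]."""
    return list(data)


def tokens_to_bytes(tokens: list[int]) -> bytes:
    """Invert bytes_to_tokens; raises ValueError if a token is outside [0, 255]."""
    return bytes(tokens)


def looks_like_jpeg(data: bytes) -> bool:
    """Cheap structural check: the stream starts with SOI and ends with EOI."""
    return data.startswith(SOI) and data.endswith(EOI)


def perturb_byte(data: bytes, index: int, value: int) -> bytes:
    """Return a copy of data with one byte replaced (a simple file anomaly)."""
    out = bytearray(data)
    out[index] = value
    return bytes(out)


# Toy stream (assumption): SOI + three arbitrary payload bytes + EOI.
# This is not a decodable image; it only mimics the outer framing.
toy = SOI + bytes([0x12, 0x34, 0x56]) + EOI
tokens = bytes_to_tokens(toy)
broken = perturb_byte(toy, 0, 0x00)  # overwrite the first marker byte
```

With a real file, the same helpers would apply to the result of `open(path, "rb").read()`. A byte-level vocabulary of 256 ids is the simplest choice here; the abstract does not say which tokenization the models use.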

BibTeX

@article{perez2024compressed,
  title={Compressed-Language Models for Understanding Compressed File Formats: a JPEG Exploration},
  author={P{\'e}rez, Juan C and Pardo, Alejandro and Soldan, Mattia and Itani, Hani and Leon-Alcazar, Juan and Ghanem, Bernard},
  journal={arXiv preprint arXiv:2405.17146},
  year={2024}
}