Abstract

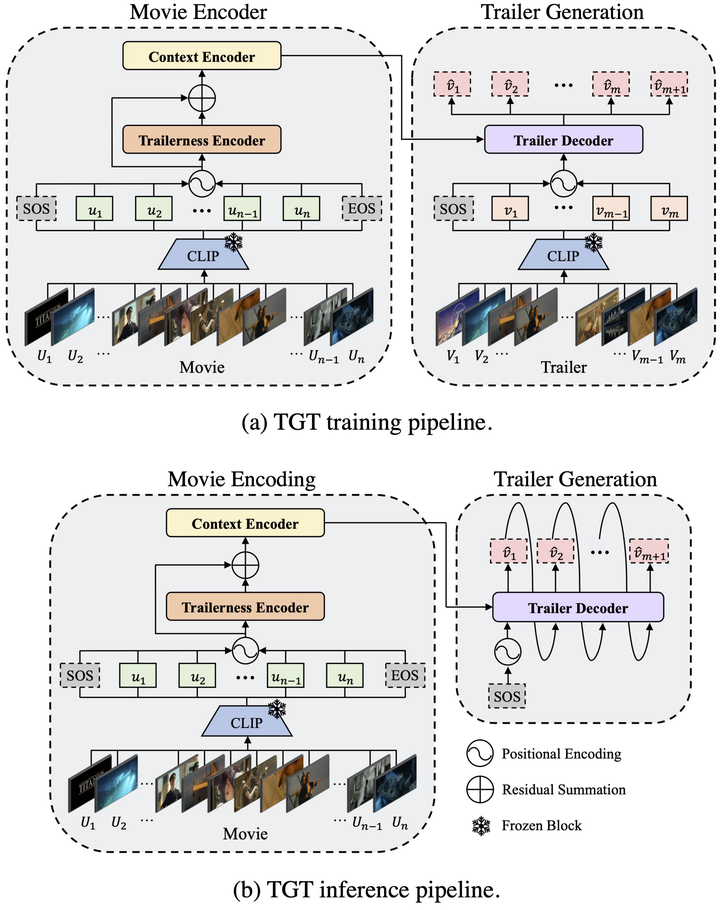

Movie trailers are an essential tool for promoting films and attracting audiences. However, the process of creating trailers can be time-consuming and expensive. To streamline this process, we propose an automatic trailer generation framework that generates plausible trailers from a full movie by automating shot selection and composition. Our approach draws inspiration from machine translation techniques and models movies and trailers as sequences of shots, thus formulating trailer generation as a sequence-to-sequence task. We introduce the Trailer Generation Transformer (TGT), a deep-learning framework built on an encoder-decoder architecture. The TGT movie encoder contextualizes each movie shot representation via self-attention, while the autoregressive trailer decoder predicts the feature representation of the next trailer shot, accounting for the relevance of shots' temporal order in trailers. TGT significantly outperforms previous methods on a comprehensive suite of metrics.
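To make the sequence-to-sequence framing concrete, the following is a minimal, untrained sketch in plain Python and is not the TGT implementation. It assumes each shot is already a fixed-length feature vector, uses single-head attention with no learned projections, and starts decoding from an all-zero feature. The final step, which maps each predicted feature to the most similar unused movie shot by cosine similarity, is an assumption added for illustration; the abstract does not specify how predictions are turned into shots. All function names (`encode_movie`, `predict_next`, `generate_trailer`) are hypothetical.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    # Subtract the maximum for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def encode_movie(shots):
    """Encoder stand-in: each movie shot attends to every movie shot."""
    return [attend(s, shots, shots) for s in shots]


def predict_next(prefix, memory):
    """Decoder stand-in: attend over the trailer generated so far,
    then cross-attend to the encoded movie to predict the next feature."""
    query = attend(prefix[-1], prefix, prefix)
    return attend(query, memory, memory)


def cosine(a, b):
    norm = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
    return dot(a, b) / norm if norm > 0 else 0.0


def generate_trailer(shots, length):
    """Autoregressively pick `length` distinct movie shot indices.

    ASSUMPTION: nearest-neighbour retrieval without repetition; the
    source does not describe this step.
    """
    if length > len(shots):
        raise ValueError("trailer cannot be longer than the movie")
    memory = encode_movie(shots)
    prefix = [[0.0] * len(shots[0])]  # assumed all-zero start feature
    chosen = []
    for _ in range(length):
        predicted = predict_next(prefix, memory)
        candidates = [i for i in range(len(shots)) if i not in chosen]
        best = max(candidates, key=lambda i: cosine(predicted, shots[i]))
        chosen.append(best)
        prefix.append(shots[best])  # feed the chosen shot back in
    return chosen


if __name__ == "__main__":
    random.seed(0)
    # Random stand-ins for 12 movie shot features of dimension 8.
    movie = [[random.gauss(0, 1) for _ in range(8)] for _ in range(12)]
    print(generate_trailer(movie, 4))
```

The point of the sketch is the control flow: the movie is encoded once, and each decoding step conditions on the shots chosen so far, which is what lets the order of trailer shots influence the next prediction. In a trained model, learned projections, positional information, and a training objective would replace the identity attention used here.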

BibTeX

@inproceedings{argaw2024towards,
  title={Towards Automated Movie Trailer Generation},
  author={Argaw, Dawit Mureja and Soldan, Mattia and Pardo, Alejandro and Zhao, Chen and Heilbron, Fabian Caba and Chung, Joon Son and Ghanem, Bernard},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={7445--7454},
  year={2024}
}